1.0 - Amortised Analysis

- So far, we have analysed algorithms for “one-off” use.

- However, we often use a data structure (object) for a purpose that involves many calls to its methods.

  - For example, the multiple calls to extract-min from a priority queue.

```
Dijkstra(G, w, s)   // (G) graph, (w) weight function, (s) source vertex
    init_single_source(G, s)
    S = ∅           // S is the set of visited vertices
    Q = G.V         // Q is a priority queue, maintaining G.V - S
    while Q != ∅
        u = extract_min(Q)
        S = S ∪ {u}
        for each vertex v in G.adj[u]
            relax(u, v, w)
```

- We want to determine the worst-case time complexity of a series of operations, rather than just a single operation in isolation.

- Consider an object x with multiple operations.

  - The “worst” operation has worst-case cost $O(f(n))$.

  - You design an algorithm that uses object x’s methods $n$ times.

  - Naively, the worst-case time complexity is $O(n \cdot f(n))$.

- However, you may know that the worst case cannot happen $n$ times in a row. In this case, how can you prove that the implementation is actually better than $O(n \cdot f(n))$?

- Amortised analysis considers sequences of operations, typically ones that successively modify a data structure.

1.1 - Dynamic Table Example

Store an initially unknown number of elements in an array.

- Double the size of the array when it runs out of space

Typically, inserting an element into an array is constant time.

- However, sometimes one must:

  - Allocate a new, larger array (of twice the size)

  - Copy all of the elements to the new array, and add the new element.
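The doubling strategy described above can be sketched in Python as follows. This is a minimal illustration rather than a production container: the class name `DynamicTable` and the `copies` counter are hypothetical, introduced only to make the resize work visible, and a fixed-length list stands in for a raw array.

```python
class DynamicTable:
    """Append-only array that doubles its capacity when full (illustrative sketch)."""

    def __init__(self):
        self.capacity = 1                    # assumed initial size of 1
        self.size = 0
        self.slots = [None] * self.capacity  # fixed-length list standing in for an array
        self.copies = 0                      # total elements copied during resizes

    def insert(self, item):
        if self.size == self.capacity:
            # Out of space: allocate an array of twice the size and copy across.
            new_slots = [None] * (2 * self.capacity)
            for i in range(self.size):
                new_slots[i] = self.slots[i]
                self.copies += 1
            self.slots = new_slots
            self.capacity *= 2
        self.slots[self.size] = item         # the constant-time part of every insert
        self.size += 1


table = DynamicTable()
for value in range(10):
    table.insert(value)
print(table.size, table.capacity, table.copies)  # 10 16 15
```

Ten insertions trigger resizes at sizes 1, 2, 4 and 8, so 1 + 2 + 4 + 8 = 15 elements are copied in total.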

Suppose our array initially has size 1.

- Inserting the first element into the array succeeds.

- Inserting the second element overflows the array, so we must construct a new array (of size 2) and copy over the old element plus the new element.

- Inserting the third element once again causes the array to overflow, so we must construct a new array (of size 4), copying over the two old elements plus the new element.

- After $n$ operations (each inserting a new element) there are $n$ elements in the array.

- Inserting an element when the array is full (the worst case, for some $n$) is $O(n)$.

- Thus, is inserting $n$ elements $O(n^2)$?

  - No, it is still $O(n)$.

- We are analysing:

```
for i = 1..n
    insert(e_i)
```

for some sequence of elements $e_1, e_2, \ldots, e_n$.

- The vast majority of insertions are constant time.

- How many are not, and what their costs are, depend on $n$.

1.1.1 - Tighter Analysis on Dynamic Table

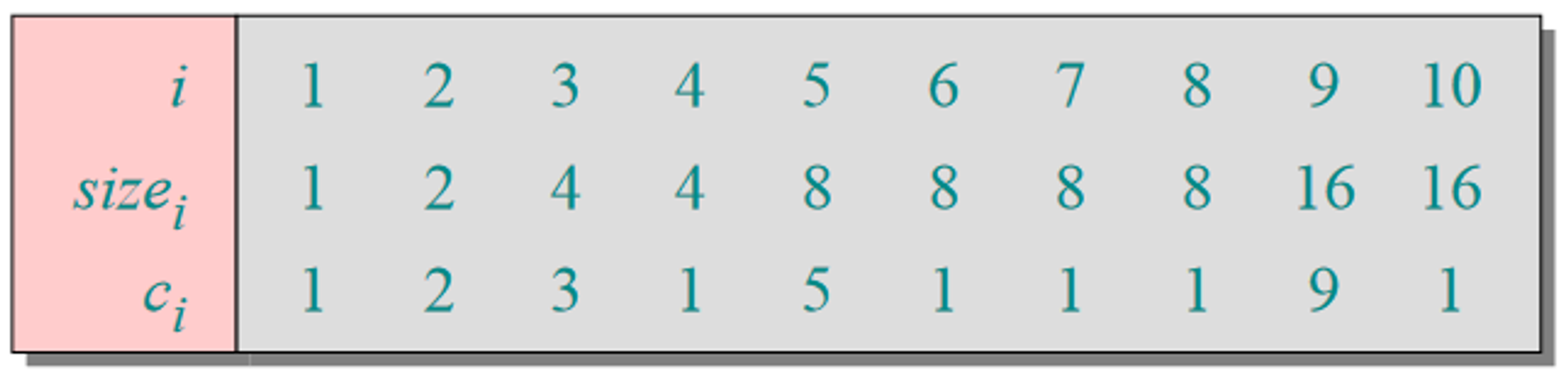

Let $c_i$ be the cost of the $i$-th insertion, relative to a constant-time insertion costing 1.

From this, we get:

- Inserting the 1st, 4th, 6th, 7th, 8th and 10th elements takes constant time, as we have space.

- Inserting the 2nd element has a relative cost of 2:

  - Copy across the existing element

  - Copy across the new element

- Inserting the 3rd element has a relative cost of 3:

  - Copy across the existing 2 elements

  - Copy across the new element
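The per-insertion costs listed above can be reproduced with a short Python sketch. The function name `insertion_costs` is hypothetical, and the cost model (1 unit per element written or copied, initial array size 1) is the one assumed in this section.

```python
def insertion_costs(n):
    """Return the relative cost of each of n inserts into a doubling array of initial size 1."""
    costs = []
    capacity, size = 1, 0
    for _ in range(n):
        if size == capacity:
            costs.append(size + 1)  # copy `size` existing elements, then the new one
            capacity *= 2
        else:
            costs.append(1)         # free slot available: just write the new element
        size += 1
    return costs


print(insertion_costs(10))  # [1, 2, 3, 1, 5, 1, 1, 1, 9, 1]
```

The expensive insertions are the 2nd, 3rd, 5th and 9th, which is exactly when the array of size 1, 2, 4 or 8 is full.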

1.1.2 - Dynamic Table: Aggregate Method

🌱 Develop a summation and solve it for $n$ operations, then develop a bound for that series of operations.

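- Inserting the 2nd, 3rd, 5th, 9th, … elements (when the array has size 1, 2, 4, 8, …) has an additional cost equal to the size of the array.

- In general, how many resizes are there in a sequence of $n$ insert operations, for $n \geq 1$?

  - A resize happens on insertion $2^j + 1$ for $j = 0, 1, 2, \ldots$, so there are $\lceil \log_2 n \rceil$ resizes.

- How much does the resize operation cost?

  - The $j$-th resize (counting from $j = 0$) copies the $2^j$ elements already stored, so it costs $2^j$.

- The cost of $n$ insertions is:

$$\underbrace{n}_{\text{insertion operations}} + \underbrace{\sum_{j=0}^{\lceil \log_2 n \rceil - 1} 2^j}_{\text{cost from resize operations}} = n + 2^{\lceil \log_2 n \rceil} - 1 < n + 2n = 3n$$

- Thus, the average cost of each dynamic table operation is $O(n)/n = O(1)$.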
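As a sanity check on the aggregate argument, the sketch below simulates $n$ insertions and compares the total against the bound of $3n$. The function name `aggregate` is hypothetical, and the cost model (1 per insertion plus the old array size at each resize, initial size 1) is the one assumed in this section.

```python
def aggregate(n):
    """Simulate n inserts; return (total cost, number of resizes)."""
    total, resizes, capacity = 0, 0, 1
    for size in range(n):     # `size` elements are stored before this insert
        if size == capacity:  # array full: copy every stored element across
            total += size
            resizes += 1
            capacity *= 2
        total += 1            # write the new element
    return total, resizes


for n in (1, 2, 10, 1000):
    total, resizes = aggregate(n)
    ceil_log2 = (n - 1).bit_length()  # equals ceil(log2(n)) for integers n >= 1
    assert resizes == ceil_log2
    assert total == n + 2 ** ceil_log2 - 1 < 3 * n
    print(n, total, total / n)
```

The printed average cost per operation stays below 3 however large $n$ becomes, which is what a constant amortised cost means here.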

1.2 - Stack Operations

- Consider standard stack operations push and pop, and

```
multipop(S, k)   // Assumes that S is not empty, and k > 0
    while S is not empty and k > 0
        pop(S)
        k = k - 1
```

- Multipop(S, k) can be $O(n)$ (where $n$ is the size of the stack) if $k \geq n$.

- Hence, any sequence of $n$ stack operations must be $O(n^2)$.

- But can we prove a better bound?

- Yes, here’s our intuition:

  - Multipop will only iterate while the stack is not empty.

  - Each element is pushed exactly once and popped at most once, hence after $n$ pushes there can be at most $n$ pops.
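The intuition above can be checked with a small Python sketch, using a plain list as the stack. The counters `pushes` and `pops` are hypothetical bookkeeping added for illustration.

```python
def multipop(stack, k):
    """Pop up to k elements from a list-based stack; return the number popped."""
    popped = 0
    while stack and k > 0:
        stack.pop()
        k -= 1
        popped += 1
    return popped


stack, pushes, pops = [], 0, 0
for value in range(8):
    stack.append(value)
    pushes += 1
pops += multipop(stack, 5)    # pops 5 elements
pops += multipop(stack, 100)  # only 3 elements remain, so only 3 iterations run
assert pops <= pushes         # an element cannot be popped unless it was pushed
print(pushes, pops)           # 8 8
```

Even though the second call asks for 100 pops, the loop stops as soon as the stack is empty, so the total work across all calls is bounded by the number of pushes.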

1.2.1 - Aggregate Method

- We want to determine the time complexity of $n$ stack operations, starting from an empty stack:

```
for i = 1..n
    Push(...) or Pop(...) or Multipop(...)
```

- Arguing that these $n$ operations are $O(n)$ in total is clumsy using the aggregate method.

- Consider the more sophisticated techniques:

  - Accounting method: focus on the operations

  - Potential method: focus on the data structure

1.2.2 - Accounting Method

- Begin the accounting method by calculating the actual cost of each operation:

  - Push: 1

  - Pop: 1

  - Multipop(S, k): $k' = \min(k, |S|)$

- Assign an amortised cost to each method:

  - Push: 2

  - Pop: 0

  - Multipop(S, k): 0

- For any sequence of stack operations, the total amortised cost must be an upper bound on the total actual cost.

- Then, one can use the amortised cost in place of the (more complicated) actual cost.

- In the above case, every operation has a constant amortised cost, and hence a sequence of $n$ operations is $O(n)$.

- We must show that the total amortised cost minus the total actual cost is never negative, for all sequences of all possible lengths.

| Operation | Actual Cost | Amortised Cost |
| --- | --- | --- |
| Push | 1 | 2 |
| Pop | 1 | 0 |
| Multipop(S, k) | $k' = \min(k, \lvert S \rvert)$ | 0 |

- Our intuition here is that the extra credit in the push operation pays for the later pop operations.

  - An element has to be pushed before it can be popped off later, so we charge this extra credit as part of the push operation, to be used later.
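The credit argument can be illustrated with a randomised simulation. This is only a sketch: the names `AMORTISED` and `simulate` are hypothetical, only the stack size is tracked (the costs depend on nothing else), and pops are only attempted on a non-empty stack.

```python
import random

# Amortised charges assigned by the accounting method.
AMORTISED = {"push": 2, "pop": 0, "multipop": 0}


def simulate(num_ops, seed=0):
    """Run random stack operations and return the final credit balance."""
    rng = random.Random(seed)
    size = 0    # current stack size
    credit = 0  # total amortised cost charged minus total actual cost paid
    for _ in range(num_ops):
        op = rng.choice(["push", "pop", "multipop"]) if size > 0 else "push"
        if op == "push":
            actual = 1
            size += 1
        elif op == "pop":
            actual = 1
            size -= 1
        else:
            k = rng.randint(1, 10)
            actual = min(k, size)  # k' = min(k, |S|)
            size -= actual
        credit += AMORTISED[op] - actual
        assert credit >= 0         # the amortised total never drops below the actual total
        assert credit == size      # each element on the stack holds 1 unit of credit
    return credit


print(simulate(1000))
```

The second assertion makes the intuition concrete: every element sitting on the stack carries exactly the one unit of credit that will pay for its eventual pop.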

1.2.3 - Potential Method

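- Focus on the data structure, instead of the operations performed on the data structure.

- Begin by determining the actual cost, $c_i$, of each operation.

- Define a potential function, $\Phi$, on the data structure, where $D_i$ is the state of the data structure after the $i$-th operation.

- The amortised cost, $\hat{c}_i$, of an operation is the actual cost plus the change in potential:

$$\hat{c}_i = c_i + \Phi(D_i) - \Phi(D_{i-1})$$

- For these amortised costs to be valid, we require that the total amortised cost is an upper bound on the total actual cost:

$$\sum_{i=1}^{n} \hat{c}_i \geq \sum_{i=1}^{n} c_i$$

  - We can simplify this expression using the telescoping series:

$$\sum_{i=1}^{n} \hat{c}_i = \sum_{i=1}^{n} \left( c_i + \Phi(D_i) - \Phi(D_{i-1}) \right) = \sum_{i=1}^{n} c_i + \Phi(D_n) - \Phi(D_0)$$

- Following on from this, the obligation is to show that $\Phi(D_i) \geq \Phi(D_0)$ after every operation (and thus $\Phi(D_n) - \Phi(D_0) \geq 0$).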

- Consider applying the potential method to the stack from before.

  - Push and Pop both have an actual cost of 1.

  - Multipop costs $k' = \min(k, |S|)$.

  - Let the potential, $\Phi(S) = |S|$, be the size of the stack.

  - The change in potential is the change in the size of the stack:

    - Push: 1 (the size of the stack has increased by 1)

    - Pop: -1 (the size of the stack has decreased by 1)

    - Multipop: $-k'$ (the size of the stack has decreased by $k'$)

  - From this, our amortised cost is:

    - Push: 2 (1 + 1)

    - Pop: 0 (1 - 1)

    - Multipop: 0 ($k' - k'$)

  - Our obligation is to show that the potential never drops below its initial value, which is trivial (the potential function gives the size of the stack, which is never negative, and the stack starts empty).

  - Therefore, all operations have constant amortised time.
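The amortised costs derived above can be recomputed mechanically as actual cost plus change in potential. The sketch below is illustrative only: the function name `amortised_costs` and the tuple encoding of operations are hypothetical, and the input sequence is assumed to never pop from an empty stack.

```python
def amortised_costs(ops):
    """Return the amortised cost of each operation, with the potential equal to the stack size.

    `ops` is a list of ("push",), ("pop",) or ("multipop", k) tuples.
    """
    size = 0  # the potential: current size of the stack, initially empty
    result = []
    for op in ops:
        before = size
        if op[0] == "push":
            actual = 1
            size += 1
        elif op[0] == "pop":
            actual = 1  # assumes the stack is non-empty
            size -= 1
        else:
            actual = min(op[1], size)  # k' = min(k, |S|)
            size -= actual
        result.append(actual + (size - before))  # actual cost + change in potential
    return result


ops = [("push",), ("push",), ("push",), ("pop",), ("multipop", 5), ("push",)]
print(amortised_costs(ops))  # [2, 2, 2, 0, 0, 2]
```

Every push comes out at 2 and every pop or multipop at 0, matching the values obtained with the accounting method.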